GoCD optimisation – push-style webhooks for pipeline scheduling

Our last GoCD blog post looked at our journey with GoCD since 2012. Today, we are sharing our GoCD optimisation work, explaining how we have improved pipeline scheduling with push-style webhooks.

Catalyst’s Cloud Infrastructure Team work with GoCD day in, day out. To date, we have created 535 pipelines.

How push-style webhooks for pipeline scheduling work

Most of the pipelines created by the Catalyst Team have two Git repositories associated with them: one for the primary code and one for the upstream project. This enables us to automatically merge in security patches.

By default, the GoCD server polls the two Git repositories looking for changes, to decide if a new pipeline build needs to be started. This polling approach leads to:

• Continuously high CPU usage on the GoCD server;

• Continuous Git checkout traffic from GitLab to GoCD, and;

• Potentially long wait times between a code push from developers and the pipeline starting.

System hooks

GitLab can fire off an HTTP request for every Git push event. These requests contain a JSON payload detailing what has changed. You can find more information here: https://docs.gitlab.com/ee/administration/system_hooks.html

The JSON object contains two pieces of information that we need:

• The Git URL, and;

• Details of the branch the commit was pushed to.
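As a rough illustration of pulling those two values out of a push event, here is a minimal sketch. The field names (`event_name`, `ref`, `project.git_ssh_url`, `project.git_http_url`) follow the push event example in the GitLab system hooks documentation as we understand it; the function name `extract_push_details`, the preference for the SSH URL and the sample payload are assumptions made for this example, not the code we run in production.

```python
import json


def extract_push_details(payload):
    """Return (git_url, branch) for a branch push event, or None otherwise."""
    if payload.get("event_name") != "push":
        return None  # not a push event, e.g. project_create
    ref = payload.get("ref", "")
    prefix = "refs/heads/"
    if not ref.startswith(prefix):
        return None  # not a branch ref
    project = payload.get("project", {})
    # Assumption: prefer the SSH URL and fall back to the HTTP URL.
    git_url = project.get("git_ssh_url") or project.get("git_http_url")
    if not git_url:
        return None
    return git_url, ref[len(prefix):]


# Hypothetical, heavily trimmed payload for illustration only.
sample = json.loads("""
{
  "event_name": "push",
  "ref": "refs/heads/main",
  "project": {
    "git_ssh_url": "git@gitlab.example.com:team/app.git",
    "git_http_url": "https://gitlab.example.com/team/app.git"
  }
}
""")

details = extract_push_details(sample)
```

Events that are not branch pushes return `None`, so the caller can ignore them without special handling.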

GoCD API

The GoCD server has an API that can be used to trigger a pipeline. You can find the details on this page: https://api.gocd.org/current/#scheduling-pipelines

The system hooks that GitLab generates are not compatible with the GoCD API.
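To show the shape of the request GoCD expects, here is a minimal sketch that builds (but does not send) a scheduling call using only the Python standard library. The endpoint path and the versioned `Accept` header are taken from the GoCD API documentation linked above as we understand it, and the exact version string may differ between GoCD releases. The server URL, pipeline name, token and the helper name `build_schedule_request` are hypothetical.

```python
import json
import urllib.request
from urllib.parse import quote


def build_schedule_request(base_url, pipeline_name, token):
    """Build a POST request asking GoCD to schedule one pipeline."""
    url = "{}/go/api/pipelines/{}/schedule".format(
        base_url.rstrip("/"), quote(pipeline_name, safe="")
    )
    return urllib.request.Request(
        url,
        data=json.dumps({}).encode("utf-8"),  # empty body: schedule with defaults
        method="POST",
        headers={
            # Assumption: v1 of the scheduling API; check your GoCD version.
            "Accept": "application/vnd.go.cd.v1+json",
            "Content-Type": "application/json",
            # Assumption: authentication with a personal access token.
            "Authorization": "Bearer {}".format(token),
        },
    )


req = build_schedule_request("https://gocd.example.com", "my-app", "s3cret")
# To actually send it: urllib.request.urlopen(req)
```

A GitLab system hook carries a repository URL and a ref, whereas this call needs a pipeline name, which is why something has to translate between the two.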

The optimisation solution – middleware

The Catalyst Team wrote a Python application to overcome the challenges in the existing process. The solution:

• Maintains a cache of all pipelines and their associated Git materials and branches;

• Receives GitLab system hook events;

• Looks up the correct pipelines for matching materials and branches;

• Schedules pipelines to run as required, making multiple API calls to GoCD if a Git repository is used by more than one pipeline, and;

• Refreshes the internal pipeline cache whenever the repository that defines the pipelines is changed.
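The steps above can be sketched roughly as follows. This is a simplified illustration of the idea rather than our actual application: the class `PipelineCache`, the helper names, the URL normalisation rule and the sample pipelines are all assumptions made for the example, and the `schedule` callback stands in for the real GoCD API call.

```python
from collections import defaultdict


def normalise(git_url):
    """Strip whitespace, a trailing slash and a trailing '.git' so URLs compare equal."""
    url = git_url.strip().rstrip("/")
    return url[:-4] if url.endswith(".git") else url


class PipelineCache:
    """Maps (git_url, branch) to the names of the pipelines that use it."""

    def __init__(self):
        self._by_material = {}

    def refresh(self, pipelines):
        """Rebuild the index from (pipeline_name, [(git_url, branch), ...]) pairs."""
        index = defaultdict(set)
        for name, materials in pipelines:
            for git_url, branch in materials:
                index[(normalise(git_url), branch)].add(name)
        self._by_material = dict(index)

    def lookup(self, git_url, branch):
        """Return the sorted pipeline names for a material, or an empty list."""
        return sorted(self._by_material.get((normalise(git_url), branch), ()))


def handle_push(cache, git_url, branch, schedule):
    """Call schedule(name) once per matching pipeline; return the names triggered."""
    names = cache.lookup(git_url, branch)
    for name in names:
        schedule(name)
    return names


cache = PipelineCache()
cache.refresh([
    ("app-build", [("git@gitlab.example.com:team/app.git", "main")]),
    ("app-deploy", [("git@gitlab.example.com:team/app", "main")]),
    ("docs", [("git@gitlab.example.com:team/docs.git", "main")]),
])

scheduled = []  # stand-in for real API calls
triggered = handle_push(
    cache, "git@gitlab.example.com:team/app.git", "main", scheduled.append
)
```

Keeping the index in memory means each incoming hook is a dictionary lookup, and calling `refresh` again when the pipeline definitions change replaces the whole index in one step.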

The difference the solution made

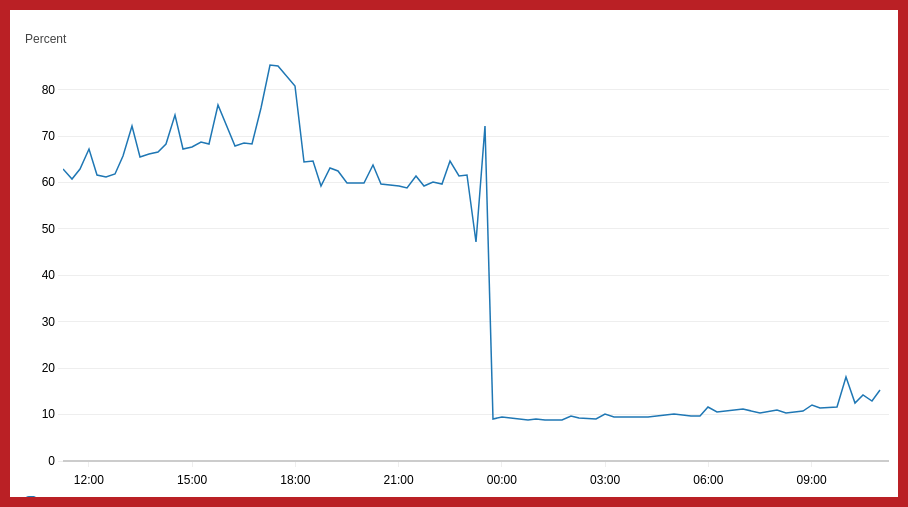

Reduced CPU utilisation on the GoCD server

CPU utilisation on the GoCD server fell sharply as soon as polling on the internal pipelines was turned off. Going forward, it’s likely we will make further improvements as we turn more external repositories over to the new push-style notifications.

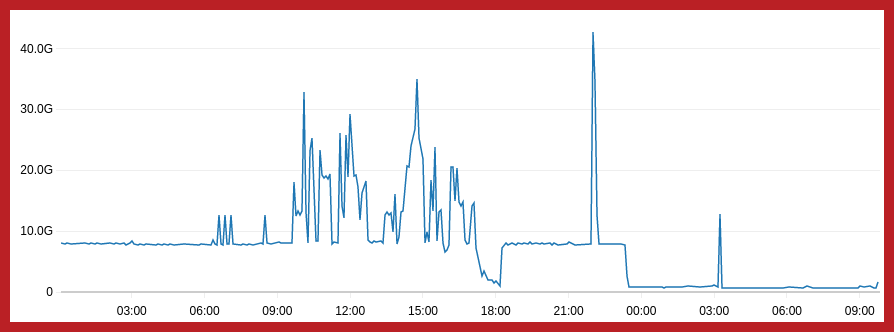

Lighter network traffic from GitLab

The network traffic from GitLab showed a big drop: the consistently high base load of 8GB/hour that was previously needed is no longer required.

Improved GoCD response times

By default, GoCD polling of materials occurs every minute, with a maximum of ten materials at a time. In practice, this means that developers can wait up to five minutes for a pipeline to kick off after a Git change. With the new process in place, this now takes just a few seconds.

Cost savings

The new solution has delivered a number of cost savings, as:

• The GoCD and GitLab servers now run on smaller AWS instances;

• GitLab to GoCD server traffic is substantially down, which means there is a significant reduction in cross-AZ traffic;

• The GoCD server needs less internal disk space to cache infrequently run pipelines, which means smaller EBS volumes, and;

• Developers spend less time waiting for pipelines.

Innovation in Managed Services

This initiative is a great example of the work the Catalyst Team deliver to ensure our cloud infrastructure is optimised, industry-leading and cost-effective. Delivering and maintaining high-performance solutions for our enterprise-level, multi-region clients is our responsibility and at the forefront of everything we do.

Explore our client case study

Find out more about Catalyst Managed Services

If you’d like to explore our managed service options and discover how we support other enterprise-level clients, we’d love to hear from you.